Yes

America has become weaker.

No

America has not become weaker.

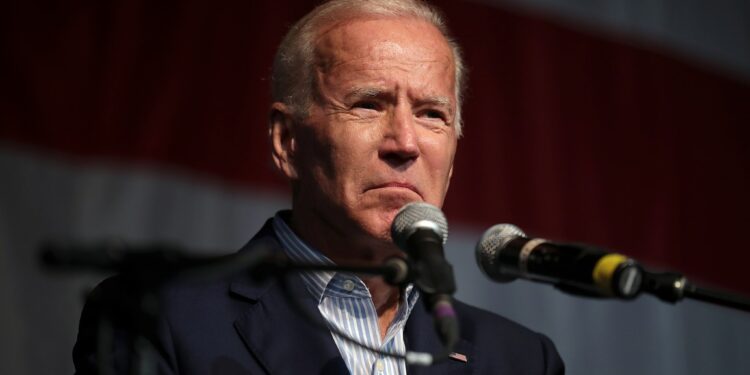

Pointing to the state of the United States economy, military, infrastructure and more, a number of political experts believe that America has become weaker since Joe Biden entered the White House. Democrats strongly disagree. What do you think?