Yes

America has gotten worse.

No

America has gotten better.

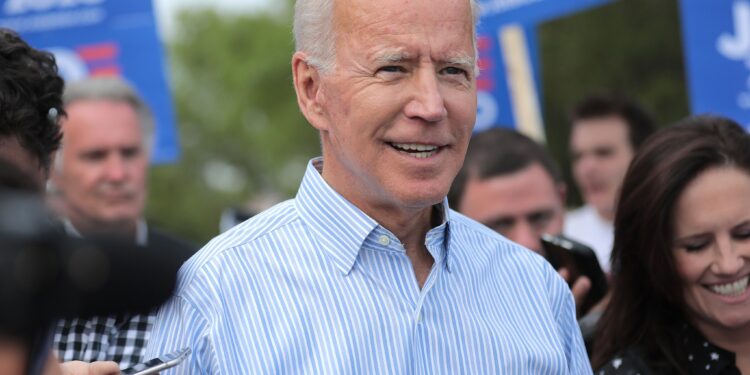

A number of conservative media outlets are openly admitting that America has gotten worse since President Biden took office. Others, however, claim that Biden has made America better. What do you think?